By Gopi Duddi

SVP, Engineering, Couchbase

- AI Will Reorganize Enterprise Engineering

- As engineering teams rapidly adopt AI technologies, enterprises will face a growing risk of AI silos, with different departments implementing their own AI solutions and creating convoluted data architectures across the organization. However, this challenge is driving the emergence of unified AI platforms that will fundamentally reshape how engineering teams operate. Just as database management systems arose to address data silos, these new platforms will serve as an abstraction layer across an organization’s AI initiatives, providing a single interface for managing AI resources.

- The most profound change will be in how engineers interact with these systems. Natural language interfaces will increasingly replace specialized query languages, democratizing access to technical systems. Organizations that move quickly to establish unified AI architectures will gain significant advantages, but success will require viewing AI not as just another tool but as a fundamental layer of the technical stack, one that requires enterprise-wide coordination and governance.

- As AI assumes a larger role in software development, generating and evaluating code, commits, design documents and other development artifacts, organizational hierarchies will likely flatten. Fewer layers of pure people management will be needed as AI systems take on more of the technical oversight and quality control functions traditionally handled by middle management.

- The Great App Revolution: AI Will Transform Every Enterprise Application

- The rapid adoption of AI tools is forcing engineering teams to radically rethink their approach to data management. Within the next few years, the integration of AI across enterprise applications will generate unprecedented volumes of data – not just from AI processing, but from the cascade of automated workflows, interconnected systems and intelligent features that AI enables.

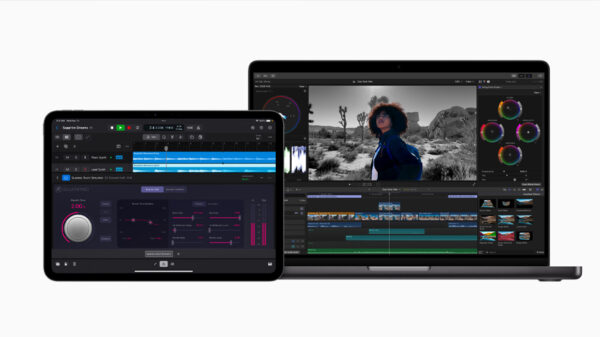

- Consider the evolution of video conferencing platforms: tomorrow’s meeting tools won’t just record and transcribe conversations – they’ll automatically identify action items, notify responsible team members and integrate with other workplace systems to track completion. This multiplication of data touch points will occur across every enterprise application, as previously siloed systems begin communicating and sharing context with each other.

- To handle this data explosion, engineering teams must abandon traditional monolithic architectures in favor of distributed systems that can scale dynamically. Success will require hybrid solutions that balance data across on-premises infrastructure and multiple cloud regions while maintaining strict data sovereignty and privacy controls. Teams must also develop expertise in automated data management and cross-application data flow, as the future enterprise ecosystem will demand seamless data sharing between AI-enhanced applications.

- The role of engineering teams will shift from building standalone applications to orchestrating complex data networks that can handle exponential growth in data volume while maintaining performance, security and compliance across the entire organizational workflow.

- Enterprise catalogs will evolve into dynamic agent directories, enabling applications to self-register as AI agents for autonomous discovery and collaboration. This transformation will create a fluid enterprise fabric where AI agents can independently locate and work with one another, fundamentally changing how applications interact and share capabilities across the organization.
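To make the idea of a self-registering agent directory more concrete, here is a minimal in-memory sketch. It assumes that each application registers under a name with an endpoint and a set of capability labels, and that other agents discover peers by asking for a capability. The class names, capability labels and endpoints are all hypothetical and are not taken from any specific product or protocol.

```python
from dataclasses import dataclass, field


@dataclass
class AgentRecord:
    # Hypothetical record an application publishes when it self-registers.
    name: str
    endpoint: str
    capabilities: set[str] = field(default_factory=set)


class AgentDirectory:
    """Hypothetical in-memory directory: agents register, others discover them by capability."""

    def __init__(self) -> None:
        self._agents: dict[str, AgentRecord] = {}

    def register(self, name: str, endpoint: str, capabilities) -> None:
        # Re-registering under the same name replaces the earlier record.
        self._agents[name] = AgentRecord(name, endpoint, set(capabilities))

    def deregister(self, name: str) -> None:
        # Removing an unknown agent is a no-op.
        self._agents.pop(name, None)

    def discover(self, capability: str) -> list[str]:
        # Return the names of all agents advertising the capability, sorted for stable output.
        return sorted(a.name for a in self._agents.values() if capability in a.capabilities)


directory = AgentDirectory()
directory.register("meeting-notes", "https://agents.example.internal/notes",
                   {"transcribe", "extract_action_items"})
directory.register("task-tracker", "https://agents.example.internal/tasks",
                   {"create_task", "track_completion"})
```

In this sketch, the meeting tool described earlier could call `directory.discover("create_task")` to locate the task tracker without a hard-coded integration. A production directory would also need authentication, health checks and persistence, none of which are modeled here.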

- Developer Tools Are About to Make a Quantum Leap Beyond Code Completion

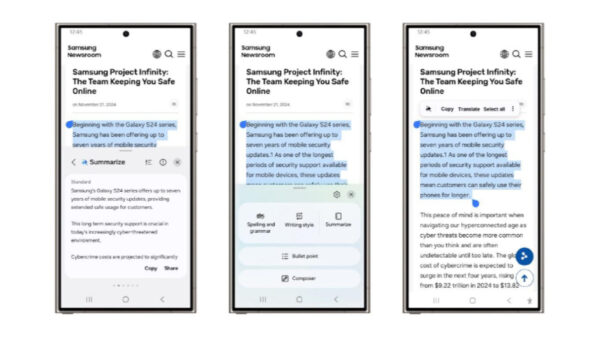

- The next generation of AI-powered developer tools will grow from simple code assistants into comprehensive development partners. While current tools like GitHub Copilot excel at code completion, documentation generation and test artifact creation, we’re on the cusp of a dramatic evolution in developer productivity tools. Within the next year, expect these tools to become proactive development agents that validate code as it’s written, simulate edge cases, check for security vulnerabilities and verify data privacy compliance, all before code reaches the main branch. This shift from reactive assistance to proactive validation will fundamentally change how developers work.

- Just as the iPhone revolutionized mobile computing and left BlackBerry’s approach obsolete within a few years, these new AI-powered development tools will create a similar paradigm shift. Developers who experience these capabilities, with complex security checks, performance optimizations and compliance validations automated in real time, won’t be able to return to traditional development workflows.

- This evolution is happening at warp speed. Features that would have taken a decade to develop are now being released in months. Organizations that fail to adopt these advanced development tools risk falling dramatically behind in both productivity and code quality. Success in the coming years will depend not just on having these tools, but on building development workflows that fully leverage their capabilities to create more reliable, secure and efficient software delivery pipelines.

- Database Industry Faces Historic Shift as GPUs Challenge 50+ Years of CPU Architecture

- The AI revolution isn’t just transforming applications — it’s poised to fundamentally disrupt database architecture at its core. After half a century of CPU-based database design, the massive parallelism offered by GPUs is forcing a complete rethinking of how databases process and manage data.

- Traditional databases were built around CPU architecture, gradually evolving from single-core to multi-core processing. These systems learned to split data into smaller partitions that could be processed in parallel across multiple CPU cores. GPUs, however, can run thousands of parallel threads simultaneously, which challenges many of these long-standing architectural assumptions.
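The CPU-era pattern described above, partitioning data and processing each partition on its own core before combining the results, can be sketched in a few lines. This is a minimal illustration using a simple sum as the "query". The function names are hypothetical, and Python threads stand in for the native worker threads a real database engine would use. CPython's global interpreter lock limits true parallelism for pure-Python work, so this example shows the partition-then-combine structure rather than real speedups.

```python
import os
from concurrent.futures import ThreadPoolExecutor


def chunk(rows, n_chunks):
    """Split rows into at most n_chunks contiguous partitions of near-equal size."""
    size = max(1, -(-len(rows) // n_chunks))  # ceiling division
    return [rows[i:i + size] for i in range(0, len(rows), size)]


def partial_sum(partition):
    """Per-worker step: aggregate one partition independently of the others."""
    return sum(partition)


def parallel_sum(rows, workers=None):
    """Scatter partitions to a pool of workers, then gather and combine the partial results."""
    workers = workers or os.cpu_count() or 1
    partitions = chunk(rows, workers)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        partials = list(pool.map(partial_sum, partitions))
    return sum(partials)
```

For example, `parallel_sum(list(range(1_000_001)), workers=8)` returns `500000500000`. A CPU-oriented design sizes the partitions to a handful of cores. A GPU-oriented design would take the same split-then-combine idea much further, spreading far smaller slices across thousands of threads, which is why many of the original assumptions need revisiting.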

- The potential for GPU-powered databases is staggering: operations that traditionally required complex CPU-based parallel processing could be spread across thousands of GPU threads at once, potentially bringing to database operations the kind of massively parallel performance that GPUs deliver for AI models such as ChatGPT.

- However, significant challenges remain. GPUs don’t offer the same reliability as CPUs, and reimagining more than fifty years of database innovation for GPU architecture is no easy feat. Yet the potential performance gains may make this transition inevitable in the coming years. Organizations developing cloud database infrastructure must prepare for a hybrid future in which GPU acceleration becomes as crucial for database operations as it is for AI workloads. This shift will likely reshape cloud offerings, requiring providers to balance traditional CPU-based services with new GPU-accelerated database solutions.

As Senior Vice President of Engineering, Gopi is responsible for all product development and delivery at Couchbase. He came to Couchbase from AWS, where he served as General Manager of Analytics and Observability. During his tenure at AWS, he oversaw the development of numerous cloud-native databases, notably Amazon Redshift and Amazon Aurora, and was a founding member of the AWS Glue and CloudWatch Observability initiatives. Previously, Gopi held senior technical leadership positions at IBM and Informix, leading their flagship database offerings.

