Sam Naffziger

SVP, AMD Corporate Fellow, and Product Technology Architect

Over the last two years, generative AI has become a major focus, with millions of people and organizations using AI tools daily. As adoption accelerates and new applications emerge, new data centers are coming online to support this transformational technology – but energy is a critical limiting factor.

In these early stages of the AI transformation, demand for compute will continue to be nearly insatiable. And in the data center, every watt of power a chip draws has an impact on the facility's energy needs, total cost of ownership, carbon emissions and, most importantly, its compute capacity. To continue driving advances in AI and broaden access, the industry must deliver higher-performance and more energy-efficient processors.

Driving energy efficiency through the 30×25 goal

In 2021, we announced our 30×25 goal, a vision to deliver a 30x energy efficiency improvement for AMD EPYC CPUs and AMD Instinct accelerators powering AI and high performance computing (HPC) by 2025 from a 2020 baseline. We have made steady progress against our goal by fine-tuning every layer from silicon to software.

Through a combination of architectural advances and software optimizations, we’ve achieved a ~28.3x energy efficiency improvement in 2024 using AMD Instinct MI300X accelerators paired with AMD EPYC 9575F host CPUs, compared to the 2020 goal baseline.
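To make a multiple like this concrete, here is a minimal Python sketch of how an energy efficiency multiple can be read, assuming it is a ratio of performance per watt between a new system and a baseline. The function names and sample inputs are illustrative assumptions; the actual 30×25 measurement methodology is not described in this post.

```python
def efficiency_multiple(perf_new, power_new, perf_base, power_base):
    """Ratio of performance per watt (new system vs. baseline).

    Assumes performance is measured in the same unit on both systems
    and power is in watts. This is a generic definition, not the
    30x25 methodology itself.
    """
    return (perf_new / power_new) / (perf_base / power_base)


def annualized_gain(multiple, years):
    """Constant yearly improvement factor that compounds to `multiple`."""
    return multiple ** (1.0 / years)


def energy_fraction_for_same_work(multiple):
    """Fraction of baseline energy needed to do the same amount of work."""
    return 1.0 / multiple


if __name__ == "__main__":
    # Hypothetical example: 4x the throughput at 2x the power draw.
    print(f"multiple: {efficiency_multiple(4.0, 2.0, 1.0, 1.0):.2f}x")

    # The goal as stated in the text: 30x between 2020 and 2025.
    print(f"yearly gain for 30x in 5 years: {annualized_gain(30.0, 5):.2f}x")
    print(f"energy for same work at 30x: {energy_fraction_for_same_work(30.0):.1%}")
```

Under these assumptions, a 30x multiple over five years corresponds to roughly doubling efficiency every year, and the same work would need about one thirtieth of the baseline energy.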

Energy efficient design starts at the architecture level

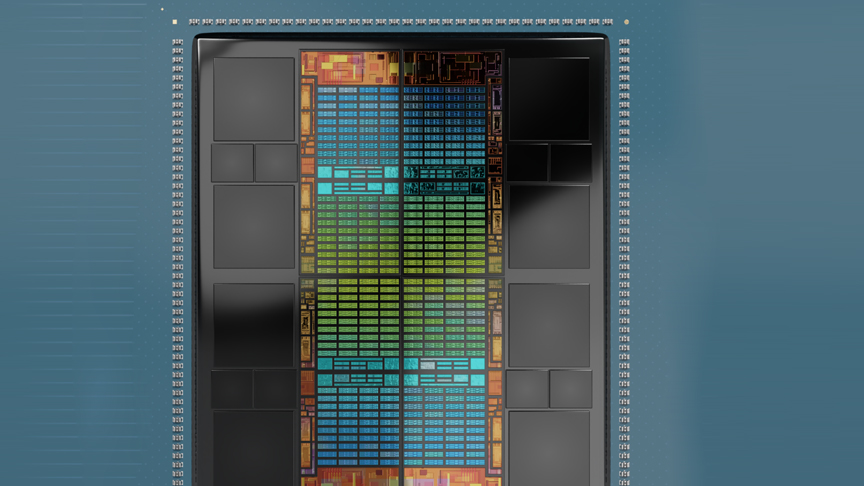

AMD takes a holistic approach to energy efficient design, balancing advancements across the many complex architectural levers that make up chip design, incorporating tight integration of compute and memory with chiplet architectures, advanced packaging, software partitions, and new interconnects. One of our primary goals across all of our products is to extract as much performance as possible while balancing energy use.

AMD Instinct MI300X accelerators pack an unprecedented 153 billion transistors and leverage advanced 3.5D CoWoS packaging to minimize communication energy and data movement overhead. With eight 5nm compute die layered on top of four 6nm IO die, all tightly connected to an industry-leading 192GB of high-bandwidth memory (HBM3) with 5.3 terabytes per second of peak bandwidth, these accelerators can ingest and process massive amounts of data at an incredible pace.

Microsoft and Meta are taking advantage of these capabilities, leveraging MI300X accelerators to power key services including all live traffic on Meta’s Llama 405B models.

Memory capacity and bandwidth play a crucial role in AI performance and efficiency, and we are committed to delivering industry-leading memory with every generation of AMD Instinct accelerators. Increasing the memory on chips, improving the locality of memory access via software partitions, and optimizing how data is processed by enabling high bandwidth between chiplets can lower interconnect energy and total communication energy consumption, reducing the overall energy demand of a system. These effects multiply across clusters and data centers.

But it’s not just accelerators that impact AI performance and energy efficiency. Pairing them with the right host CPU is critical to keeping accelerators fed with data for demanding AI workloads. AMD EPYC 9575F CPUs are tailor-made for GPU-powered AI solutions, with our testing showing up to 8% faster processing than a competitive CPU thanks to a higher boost clock frequency.

Continuous improvement with software optimizations

The AMD ROCm open software stack is also delivering major leaps in AI performance, allowing us to continue driving performance and energy efficiency optimizations for our accelerators well after they’ve shipped to customers.

Since we launched the AMD Instinct MI300X accelerators, we have doubled inferencing and training performance across a wide range of the most popular AI models through ROCm enhancements. We are continuously fine-tuning the stack, and our engagement in the open ecosystem with partners like PyTorch and Hugging Face means that developers have access to daily updates of the latest ROCm libraries to help ensure their applications are always as optimized as possible.

With ROCm, we have also expanded support for lower precision AI-specific math formats including FP8, enabling greater power efficiency for AI inference and training. Lower precision math formats can alleviate the memory bottlenecks and latency associated with higher precision formats. That allows larger models to be handled within the same hardware constraints and makes training and inference more efficient. Our latest ROCm 6.3 release continues to extend performance, efficiency and scalability.
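The memory effect of lower precision formats can be estimated with simple arithmetic. The sketch below is a rough illustration only: it counts model weights alone (ignoring activations, KV cache, and runtime overhead), takes 1 GB as 10^9 bytes, and uses the 192GB HBM3 capacity and the 405B-parameter model size mentioned earlier in this post. The function names are hypothetical and not part of ROCm.

```python
import math

# Storage size of one parameter in each numeric format, in bytes.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "fp8": 1}


def weight_footprint_gb(num_params, fmt):
    """Memory needed for the weights alone, in GB (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[fmt] / 1e9


def min_accelerators_for_weights(num_params, fmt, hbm_gb=192):
    """Smallest accelerator count whose combined HBM holds the weights.

    Assumption: weights are split evenly and nothing else uses memory,
    so real deployments will need more headroom than this lower bound.
    """
    return math.ceil(weight_footprint_gb(num_params, fmt) / hbm_gb)


if __name__ == "__main__":
    params = 405e9  # parameter count of a 405B model
    for fmt in ("fp32", "fp16", "fp8"):
        gb = weight_footprint_gb(params, fmt)
        n = min_accelerators_for_weights(params, fmt)
        print(f"{fmt}: {gb:.0f} GB of weights, at least {n} accelerators")
```

Halving the bytes per parameter halves the weight footprint, which is why the same hardware can hold a larger model, or the same model on fewer devices, at lower precision.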

Where do we go from here?

Our high-performance AMD EPYC CPUs and AMD Instinct accelerators are powering AI at scale, uncovering incredible insights through the world’s fastest supercomputers, and enabling data centers to do more in a smaller footprint. We are not taking our foot off the gas – we are continuing to push the boundaries of performance and energy efficiency for AI and high performance computing through holistic chip design. What’s more, our open software approach enables us to harness the collective innovation across the open ecosystem to drive performance and efficiency enhancements on a consistent and frequent cadence.

With our thoughtful approach to hardware and software co-design, we are confident in our roadmap to exceed the 30×25 goal and excited about the possibilities ahead, where we see a path to massive energy efficiency improvements within the next couple of years.

As AI continues to proliferate and demand for compute accelerates, energy efficiency becomes increasingly important beyond the silicon, as we broaden our focus to address energy consumption at the system, rack, and cluster level. We look forward to sharing more on our progress and what’s after 30×25 when we wrap up the goal next year.