AI trailblazers are using Oracle Cloud Infrastructure (OCI) AI Infrastructure and OCI Supercluster to support the development and deployment of production-ready AI, including large language model training for generative AI applications. A growing number of AI companies, including Modal, Suno, Together AI, and Twelve Labs, are deploying OCI AI Infrastructure to accelerate AI training and inferencing at scale.

As the demand for AI continues to surge, AI companies require secure, reliable, high-performance cloud and AI infrastructure that allows them to quickly and economically scale GPU instances as needed. With OCI AI Infrastructure, AI companies have access to high-performance GPU clusters for machine learning, image processing, model training, inference computation, physics-based modeling and simulation, and massively parallel HPC applications.

“AI innovators have a small margin of error when it comes to deploying AI infrastructure,” said Greg Pavlik, senior vice president, AI and Data Management Services, Oracle Cloud Infrastructure. “OCI brings powerful computing capabilities and significant cost savings to a variety of AI use cases and that is why it has become the top destination for leaders in AI.”

Modal, a serverless GPU platform, lets customers run generative AI models, large-scale batch jobs, and job queues without having to configure or set up the necessary infrastructure. To support its rapid expansion to data centers across the world, Modal leveraged OCI Compute bare metal instances for faster and more cost-effective inferencing for its customers.

“OCI’s unmatched price and performance gives us the scale and high performance necessary to run massive AI models without excessive compute costs,” said Erik Bernhardsson, founder and CEO, Modal. “With Modal on OCI, our customers get full serverless execution while truly only paying for what they use.”

Suno is a leading generative music company whose flagship product makes realistic, personalized music in seconds. Suno chose OCI Supercluster to train its proprietary machine learning models and support growing demand for its next generation of generative music models.

“We found that the primary value of partnering with Oracle was around scale,” said Mikey Shulman, founder and CEO, Suno. “Oracle gives us the confidence that as we grow, we are backed by a provider that can grow and scale with us. The new OCI Supercluster management capabilities, including user management, disk management, GPU management, and the ability to add new machines, were instrumental in helping us improve our operations.”

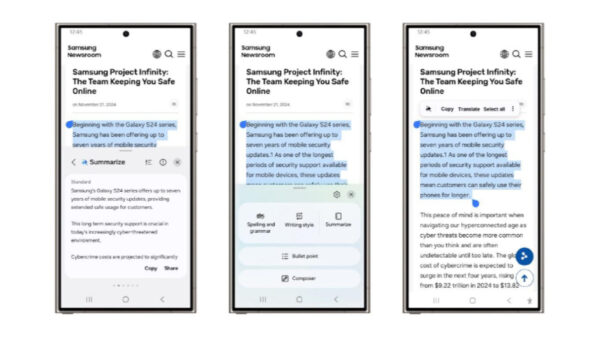

Together AI is a research-driven AI company providing the fastest cloud platform for inference and training of generative AI models. Together AI selected OCI for its strong performance, built-in security, and white-glove engineering support.

“Security was top of mind in selecting a cloud provider to support our rapid growth,” said Vipul Ved Prakash, founder and CEO, Together AI. “OCI’s trusted security and stability has helped us rapidly scale to support growing demand from startups and enterprise customers adopting our Inference, Fine-tuning, and Training solutions for their industry-leading performance.”

Twelve Labs is an AI startup building foundation models for multimodal video understanding. Its models let users search videos for specific scenes using natural language, generate accurate and insightful text about videos through prompting, and automatically classify videos based on custom categories. OCI Compute bare metal GPUs and the high internode bandwidth that OCI provides allow Twelve Labs to train large-scale models at high speeds.

“OCI AI Infrastructure allows us to train our models at scale without compromising quality or speed,” said Jae Lee, founder and CEO, Twelve Labs. “OCI provides the performance, scalability, and cluster networking we need to further advance video understanding, while significantly reducing the time and cost necessary to deploy our AI models.”

OCI AI Infrastructure offers several capabilities that enable AI companies to innovate faster

OCI Compute virtual machines and bare metal GPU instances can power applications for computer vision, natural language processing, recommendation systems, and more. OCI Supercluster provides ultra-low latency cluster networking, HPC storage, and OCI Compute bare metal instances to train large, complex models, such as large language models, at scale. Oracle’s dedicated engineering support team works with customers through the entire deployment, from planning to launch, to help ensure success.