The cloud made ML accessible to more users, but until a few years ago, building, training, and deploying models remained painstaking and tedious, requiring small teams of data scientists to iterate continuously for weeks or months before a model was production-ready.

Amazon SageMaker launched five years ago to address these challenges, and since then AWS has added more than 250 new features and capabilities to make it easier for customers to use ML across their organization. Globally, tens of thousands of customers of all sizes and across industries rely on Amazon SageMaker.

AWS unveiled eight new capabilities for Amazon SageMaker, its end-to-end ML service. These include:

- New geospatial capabilities in Amazon SageMaker make it easier for customers to make predictions using satellite and location data – Amazon SageMaker now speeds up and simplifies the generation of geospatial ML predictions by letting customers enrich their datasets, train geospatial models, and visualize the results in hours instead of months. Customers can use Amazon SageMaker to access a range of geospatial data sources from AWS (e.g., Amazon Location Service), open-source datasets (e.g., Amazon Open Data), or their own proprietary data, including data from third-party providers (like Planet Labs).

- Accelerate collaboration across data science teams – Amazon SageMaker now gives teams a workspace where they can read, edit, and run notebooks together in real time to streamline collaboration and communication. Teammates can review notebook results together to immediately understand how a model performs, without passing information back and forth.

- Simplified data preparation – Amazon SageMaker Studio Notebook now offers a built-in data preparation capability that allows practitioners to visually review data characteristics and remediate data-quality problems in just a few clicks—all directly in their notebook environment.

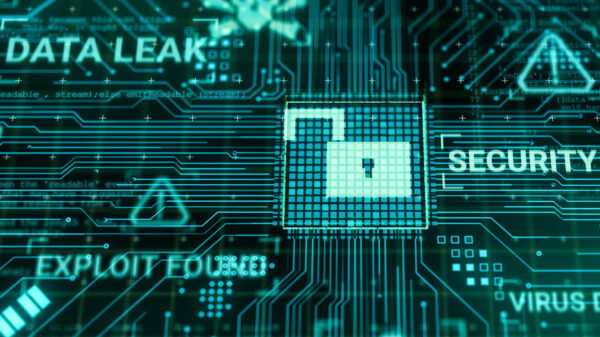

- Amazon SageMaker Role Manager makes it easier to control access and permissions – Administrators can now control access and define permissions for users more easily, reducing the time and effort needed to onboard and manage users over time.

- Amazon SageMaker Model Cards simplify model information gathering – Amazon SageMaker Model Cards provide a single location to store ML model information in the AWS console, streamlining documentation throughout a model’s lifecycle.

- Amazon SageMaker Model Dashboard provides a central interface to track ML models – The dashboard gives a comprehensive overview of deployed ML models and endpoints, enabling practitioners to track resources and model behavior in one place.

- Automated validation of new models using real-time inference requests – Amazon SageMaker now makes it easier for practitioners to compare the performance of new models against production models using the same real-world inference requests in real time. They can scale their testing to thousands of new models simultaneously, without building their own testing infrastructure.

- Automatic conversion of notebook code to production-ready jobs – Amazon SageMaker Studio Notebook now allows practitioners to select a notebook and automate it as a job that can run in a production environment.
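The automated validation capability described above follows a pattern commonly called shadow testing: every live request is answered by the production model, while a copy of the same request is sent to candidate models whose outputs are only recorded for comparison. The minimal sketch below illustrates that pattern in plain Python. The `ShadowRouter` class, the toy model functions, and the tolerance-based agreement metric are all hypothetical stand-ins chosen for illustration; they are not SageMaker's API or its internal implementation.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

# Hypothetical model type: takes a feature vector, returns a numeric prediction.
Model = Callable[[List[float]], float]


@dataclass
class ShadowRouter:
    """Serve production predictions while mirroring each request to candidates."""
    production: Model
    candidates: Dict[str, Model]
    tolerance: float = 0.05  # hypothetical: max absolute difference counted as agreement
    agreements: Dict[str, int] = field(default_factory=dict)
    requests_seen: int = 0

    def predict(self, features: List[float]) -> float:
        # The caller only ever receives the production model's answer.
        served = self.production(features)
        self.requests_seen += 1
        # Each candidate sees the same real request; its output is only recorded.
        for name, model in self.candidates.items():
            if abs(model(features) - served) <= self.tolerance:
                self.agreements[name] = self.agreements.get(name, 0) + 1
        return served

    def agreement_rates(self) -> Dict[str, float]:
        # Fraction of mirrored requests on which each candidate matched production.
        if self.requests_seen == 0:
            return {name: 0.0 for name in self.candidates}
        return {
            name: self.agreements.get(name, 0) / self.requests_seen
            for name in self.candidates
        }


def production_model(x: List[float]) -> float:
    return sum(x)


def candidate_close(x: List[float]) -> float:
    return sum(x) + 0.01  # nearly identical to production


def candidate_far(x: List[float]) -> float:
    return 2 * sum(x)  # diverges unless the sum is zero


router = ShadowRouter(
    production=production_model,
    candidates={"close": candidate_close, "far": candidate_far},
)
for request in [[1.0, 2.0], [0.0, 0.0], [3.0, 4.0]]:
    router.predict(request)
print(router.agreement_rates())  # {'close': 1.0, 'far': 0.3333333333333333}
```

A production system would typically mirror requests asynchronously so that candidate models never add latency to the served response; the synchronous loop here simply keeps the sketch short.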

New database and analytics capabilities that make it faster and easier for customers to query, manage, and scale their data

Organisations today create and store petabytes—or even exabytes—of data from a growing number of sources (e.g., digital media, online transactions, and connected devices). To maximise the value of this data, customers need an end-to-end data strategy that provides access to the right tools for all data workloads and applications, along with the ability to perform reliably at scale as the volume and velocity of data increase.

AWS announced five new capabilities across its database and analytics portfolios that make it faster and easier for customers to manage and analyse data at petabyte scale. These include:

- Amazon DocumentDB Elastic Clusters power petabyte-scale applications with millions of writes per second – Previously, customers had to manually distribute data and manage capacity across multiple Amazon DocumentDB nodes. Amazon DocumentDB Elastic Clusters allow customers to scale beyond the limits of a single database node within minutes, supporting millions of reads and writes per second and storing up to 2 petabytes of data.

- Amazon OpenSearch Serverless automatically scales search and analytics workloads – To power use cases like website search and real-time application monitoring, tens of thousands of customers use Amazon OpenSearch Service. Many of these workloads are prone to sudden, intermittent spikes in usage, making capacity planning difficult. Amazon OpenSearch Serverless automatically provisions, configures, and scales OpenSearch infrastructure to deliver fast data ingestion and millisecond query responses, even for unpredictable and intermittent workloads.

- Amazon Athena for Apache Spark cuts startup time for interactive analytics to under one second – Customers use Amazon Athena, a serverless interactive query service, because it is one of the easiest and fastest ways to query petabytes of data in Amazon Simple Storage Service (Amazon S3) using a standard SQL interface. Developers also value the fast query speed and ease of use of Apache Spark, but they do not want to spend time setting up, managing, and scaling their own Apache Spark infrastructure each time they want to run a query. With Amazon Athena for Apache Spark, customers can start interactive Spark analytics in under a second without provisioning, configuring, or scaling resources themselves.

- AWS Glue Data Quality automatically monitors and manages data freshness, accuracy, and integrity – Hundreds of thousands of customers use AWS Glue to build and manage modern data pipelines quickly, easily, and cost-effectively. Effective data-quality management is a time-consuming and complex process, requiring data engineers to spend days gathering detailed statistics on their data, manually identifying data-quality rules based on those statistics, and applying them across thousands of datasets and data pipelines. Once these rules are implemented, data engineers must continuously monitor for errors or changes in the data to adjust rules accordingly. AWS Glue Data Quality automatically measures, monitors, and manages the data quality of Amazon S3 data lakes and AWS Glue data pipelines, reducing the time for data analysis and rule identification from days to hours.

- Amazon Redshift now supports Multi-Availability Zone (Multi-AZ) deployments – Tens of thousands of AWS customers collectively process exabytes of data with Amazon Redshift every day. Building on these capabilities, Amazon Redshift now offers a high-availability configuration that enables fast recovery while minimizing the risk of data loss. With Amazon Redshift Multi-AZ, clusters are deployed across multiple Availability Zones and use all of their resources to process read and write queries, eliminating the need for under-utilized standby copies and maximizing price performance for customers.
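AWS Glue Data Quality rules are expressed in Glue's Data Quality Definition Language (DQDL). The minimal sketch below assembles a small DQDL ruleset in Python using three built-in rule types (`IsComplete`, `IsUnique`, `RowCount`). The database, table, column names, and threshold are hypothetical, and the request dictionary is only built, not sent: with AWS credentials configured, it could be passed as keyword arguments to the `create_data_quality_ruleset` call on a boto3 `glue` client.

```python
from typing import Dict, List


def build_dqdl_ruleset(
    complete_columns: List[str],
    unique_columns: List[str],
    min_rows: int,
) -> str:
    """Assemble a DQDL ruleset string from simple column requirements."""
    rules: List[str] = []
    # IsComplete: the column must contain no null values.
    rules += [f'IsComplete "{col}"' for col in complete_columns]
    # IsUnique: every value in the column must be distinct.
    rules += [f'IsUnique "{col}"' for col in unique_columns]
    # RowCount: the dataset must contain more than min_rows rows.
    rules.append(f"RowCount > {min_rows}")
    body = ",\n".join(f"    {rule}" for rule in rules)
    return f"Rules = [\n{body}\n]"


def ruleset_request(name: str, database: str, table: str, ruleset: str) -> Dict[str, object]:
    """Keyword arguments for boto3's glue.create_data_quality_ruleset (not sent here)."""
    return {
        "Name": name,
        "Ruleset": ruleset,
        "TargetTable": {"DatabaseName": database, "TableName": table},
    }


# Hypothetical "orders" table in a hypothetical "sales_db" database.
ruleset = build_dqdl_ruleset(
    complete_columns=["order_id", "customer_id"],
    unique_columns=["order_id"],
    min_rows=0,
)
request = ruleset_request("orders-dq", "sales_db", "orders", ruleset)
print(ruleset)
```

Generating rules from code like this keeps them versioned alongside the pipeline that produces the data; the automatic rule recommendations described above can then serve as a starting point to refine.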

“Improving data discovery, management, and analysis is imperative for organisations in Asia Pacific to unlock the benefits of machine learning, which can help customers solve even bigger challenges, automate decision making, and drive efficiencies. Today’s announcements are a continuation of our investment to make it faster and easier for our customers to manage, analyse, scale and collaborate on their data and ML projects. As more organisations in Asia Pacific adopt machine learning, we’ll see more breakthroughs in areas such as climate science, urban planning, financial services, disaster response, supply chain forecasting, precision agriculture, and more,” said Olivier Klein, AWS Chief Technologist in Asia Pacific and Japan.