By Chung Heng Han

Senior Vice President, Systems, EMEA & JAPAC, Oracle

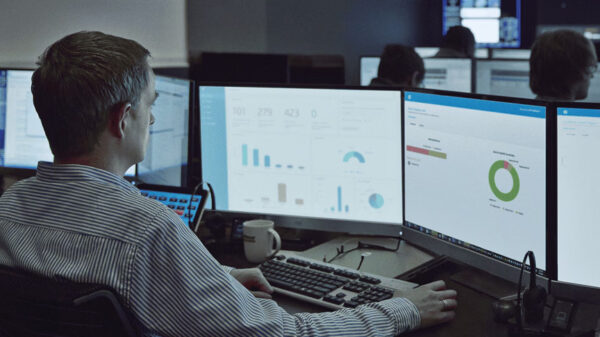

Data is growing at an unprecedented rate. In fact, by one widely cited estimate, 90% of the world’s data today was created within the last two years alone, which is mind-blowing. Data is the new oil and the next frontier for innovation. Increasingly, organizations are becoming data-driven as they come to understand the importance of leveraging data: it helps them work differently, create new business models, and prepare to be part of the fourth industrial revolution.

However, it can be tough for a company to access all the data it holds and collects. More often than not, that data is locked in separate silos, both on-premises and, increasingly, on different clouds. A recent Forrester study [1] found that 73% of organizations operate disparate and siloed data strategies, and 64% are still coping with the challenge of running a multi-hybrid infrastructure. No wonder 70% of organizations consider simplifying their processes a high or critical business priority.

A multi-cloud strategy

As organizations seek to break down these silos and simplify their business, they often turn to the public cloud. The public cloud comes with several benefits, including improved agility, faster time to market, faster innovation, elastic scalability, cost optimization, enhanced productivity and data-driven decision-making.

However, the cloud is still in its early days. Analysts estimate that current cloud penetration is still less than 20%, with most of that consisting of non-critical workloads. In part, this is because no two organizations have the same infrastructure needs, so a generic, one-size-fits-all approach does not work. Moreover, moving to the cloud is widely regarded as especially hard for critical workloads, such as these valuable data stores.

Therefore, while many CIOs dream of a standardized and unified infrastructure based on one or two strategic vendors, the reality of enterprise infrastructure is that the different elements of today’s key applications, including the databases they run on, will be split between disparate public clouds, old-school on-premises resources, and private clouds. According to a recent Gartner [2] survey, 81% of public cloud users are using multiple cloud providers and are running a hybrid strategy, a multi-cloud strategy, or a mixture of both.

Hybrid versus multi-cloud

Hybrid cloud is a concept most companies are increasingly aware of. It is the combination of public infrastructure cloud services with a private cloud infrastructure, generally with on-premises servers running cloud software. While operating independently of each other, the public and private environments communicate over an encrypted connection, either through the public internet or through a private dedicated link.

A multi-cloud, on the other hand, is 100% public cloud, with infrastructure spread across different cloud providers or across regions of the same cloud.

The main advantage of multi-cloud is that organizations and application developers can pick and choose components from multiple vendors and use whichever best suits their intended purpose.

For data-driven organizations that use their data as an asset, the ability to be selective is critical. It can enable them to move corporate data closer to key cloud services, such as high-performance computing and new services that give them access to emerging technologies like artificial intelligence (AI), machine learning (ML), and advanced analytics, so that they can build new business models.

Between a rock and a hard place

However, it is still true that some workloads move to the public cloud more easily than others. While the use of cloud applications is increasingly the norm, moving other workloads into the public cloud poses real issues. In particular, there can be significant challenges when moving critical databases into the generic public cloud. Such a move can introduce business risk precisely because these databases are so critical, and it can also bring challenges related to performance, scalability, security, and data sovereignty, as well as the unintended consequences of increased latency, which can lead to time-outs and high networking costs.

In addition, IT leaders are trying to manage these risks while simultaneously enabling their organizations to embrace some public cloud services, optimally and on their own terms. This can leave them feeling caught between a rock and a hard place.

Cloud adjacent architecture

What they need is a model that combines the elasticity of the cloud with the processing power of on-premises IT. A new model, known as Cloud Adjacent Architecture, can offer a solution for those not yet willing or able to move to the public cloud.

In effect, it places their data on powerful, cloud-ready hardware located close to the public cloud, within a globally interconnected exchange of data centers. This enables enterprises to connect securely to the cloud and to other business partners while directly lowering latency and networking costs.

By doing this, enterprises can reduce their data center footprint and take advantage of the scale and variety of modern public cloud services while retaining the control, precision, and data ownership of on-premises infrastructure. This architecture is particularly suited to organizations that:

- Are trying to achieve better business and product development agility

- Are trying to get out of the data center business (stepping stone to the cloud)

- Have data sovereignty challenges with public clouds

- Have moved workloads to the cloud and have since run into application integration and latency issues

- Have specialized workloads they want to move to the edge of the cloud

- Have performance, scalability and special capability requirements that cloud providers cannot meet

Moreover, this Cloud Adjacent solution can provide a zero-change architecture, meaning companies do not need to change their existing applications to adopt it. Best of all, customers can choose who manages the data (themselves, a partner, or a systems integrator) and how it gets done, providing total flexibility and enabling them to retain control of their data.

This added flexibility in multi-cloud architectures will help drive digital transformation further, and we expect it to give rise to many different types of use cases.

Take, for example, smart city initiatives. With the proliferation of sensors, cameras and other technologies, our urban infrastructure is changing from being purely physical to also encompassing data and technology. The convergence of the digital and physical worlds gives those driving smart city initiatives a unique opportunity to better understand the dynamics of a location in real time and then use those insights to provide value back to residents and businesses through new or better services, often delivered by an app.

However, doing this means harnessing a proliferation of data which, given the number of parties involved, is likely to sit in different systems and even different clouds. The Cloud Adjacent approach can deliver the best of both worlds within a multi-cloud deployment, potentially removing some of the core drawbacks of data complexity and enabling a more rapid rollout of projects that could make a significant difference to all our lives.

[1] https://www.zdnet.com/article/multicloud-everything-you-need-to-know-about-the-biggest-trend-in-cloud-computing/

[2] https://www.gartner.com/smarterwithgartner/why-organizations-choose-a-multicloud-strategy/