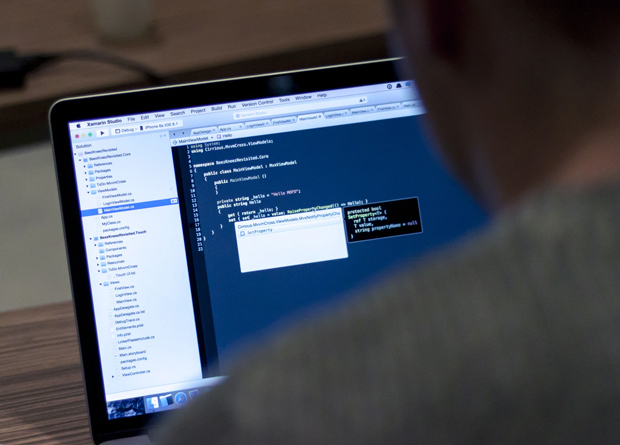

Web scraping produces reams of information that can easily overwhelm you. Even a relatively small-scale scrape of online data can produce tens of thousands of data points. A larger, more typical web-scraping effort often produces closer to 20 million data points per day. That is an enormous amount of data, and it can easily overwhelm your business if you are not prepared to cut through it.

Fortunately, quality web-scraping tools can cut through the clutter and deliver far more useful results. The more data you gather that supports smart strategic decisions, the greater your potential for long-term success.

Website owners understandably want to block bots and other malicious activity that might otherwise gain access to their sites. Liability concerns and the need to protect personal information and similarly sensitive data make it important to stop incursions that could be used for illegal purposes. Yet web scraping is a widely recognized and accepted way to gather marketing data and make truly informed business decisions. Your web-scraping tools need to adapt to the changing conditions of the online world so that you keep access to all the useful data available from online sources.

Reliability and Frequency Are Critical

Big data fuels quantitative decision-making when it is used correctly. Quantitative analysis requires a reliable data feed collected at a regular frequency to maintain statistical significance. Otherwise, you are just making slightly educated guesses that might lead to bad business decisions. Many websites create obstacles that undermine the reliability and frequency of scraping: CAPTCHAs, ghosting, blocks, and redirects can stop all but the best web-scraping tools. Failed requests that must be retried, request-header checks, and proxy controls create further blocks that your tools need to overcome to keep acquiring data reliably, at the frequency needed to achieve significance.
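Handling retries well is one place where reliability is won or lost. The Python sketch below retries a request with exponential backoff and random jitter, so a temporary block or rate limit does not break the data feed. It is a minimal, stdlib-only sketch under stated assumptions: `fetch` stands in for whatever function performs your HTTP request, and `RetryableError` is a hypothetical exception your own fetch code would raise on responses worth retrying, such as HTTP 429 or 503.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


class RetryableError(Exception):
    """Hypothetical error your fetch code raises for retry-worthy responses (e.g. 429, 503)."""


def retry_with_backoff(
    fetch: Callable[[], T],
    max_attempts: int = 5,
    base_delay: float = 1.0,
    max_delay: float = 30.0,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Call fetch() until it succeeds, waiting exponentially longer between attempts."""
    for attempt in range(max_attempts):
        try:
            return fetch()
        except RetryableError:
            if attempt == max_attempts - 1:
                raise  # out of attempts: surface the last error to the caller
            # Exponential backoff: 1s, 2s, 4s, ... capped at max_delay,
            # plus random jitter so many workers don't retry in lockstep.
            delay = min(max_delay, base_delay * (2 ** attempt))
            sleep(delay + random.uniform(0, delay / 2))
    raise RuntimeError("max_attempts must be at least 1")
```

Passing `sleep` as a parameter keeps the function easy to test; in production the default `time.sleep` applies.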

Overcoming Bans, Blocks, and Other Barriers

Effective web-scraping tools use proxy management to overcome current and future blocks. They must identify the types of bans and other measures used to thwart automated bots and searches, and retry automatically when a request is blocked. Geo-targeted IPs, rotating IPs, and request throttling can all help overcome the barriers websites create. A reliable rotating proxy provider is the best tool for accomplishing that: it helps identify and overcome the many bans and blocks your web-scraping tools encounter while maintaining the frequency and reliability of data acquisition. The best providers combine rotating IPs, geo-targeted IPs, request throttling, and similar techniques to identify, remember, and bypass the blocks that website owners and operators put in place.
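To make proxy rotation and request throttling concrete, here is a minimal Python sketch; it is not any particular provider's API. `ProxyRotator` hands out the next proxy from a pool in round-robin order, and `DomainThrottle` enforces a minimum gap between requests to the same domain. Both class names are hypothetical, and any proxy addresses you see in examples (such as `203.0.113.10`, a documentation-only range) are placeholders; a real pool, including geo-targeted IPs, would come from your proxy provider.

```python
import itertools
import time
from typing import Callable, Dict, List
from urllib.parse import urlparse


class ProxyRotator:
    """Cycle through a pool of proxy URLs, handing out the next one per request."""

    def __init__(self, proxy_urls: List[str]):
        # proxy_urls: e.g. ["http://203.0.113.10:8080", ...] (hypothetical addresses)
        if not proxy_urls:
            raise ValueError("proxy pool must not be empty")
        self._cycle = itertools.cycle(proxy_urls)

    def next_proxies(self) -> Dict[str, str]:
        # Route both schemes through the same proxy; this dict shape matches
        # the `proxies` argument accepted by the `requests` library.
        proxy = next(self._cycle)
        return {"http": proxy, "https": proxy}


class DomainThrottle:
    """Enforce a minimum delay between consecutive requests to the same domain."""

    def __init__(
        self,
        min_interval: float,
        clock: Callable[[], float] = time.monotonic,
        sleep: Callable[[float], None] = time.sleep,
    ):
        self.min_interval = min_interval
        self._clock = clock
        self._sleep = sleep
        self._last_request: Dict[str, float] = {}

    def wait(self, url: str) -> float:
        """Sleep if needed before requesting url; return how many seconds were slept."""
        domain = urlparse(url).netloc
        now = self._clock()
        last = self._last_request.get(domain)
        waited = 0.0
        if last is not None:
            waited = max(0.0, self.min_interval - (now - last))
            if waited > 0:
                self._sleep(waited)
        # Record when this request actually goes out (after any sleep).
        self._last_request[domain] = now + waited
        return waited
```

Before each request you would call `throttle.wait(url)` and then pass the rotator's output to your HTTP client, for example `requests.get(url, proxies=rotator.next_proxies(), timeout=10)`. A back-connect provider typically rotates IPs for you behind a single gateway address, in which case only the throttle is needed on your side.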

Gain an Edge on Your Competitors

When you can overcome those obstacles consistently, you gain a competitive advantage and can expand your market share. Using top residential, back-connect, and rotating proxies for web scraping helps ensure a strong advantage over your competitors. You also gain more useful, insightful data for smarter strategic business planning, which can help you strengthen your current markets while growing into others.