David Bennett, AMD APJ Mega-Region VP

In today’s hyper-connected world, technology buyers can find an answer to almost any question with a web browser and an internet connection. We can all do a little research before we sign the check or hand over the credit card. In the personal computer market, performance benchmarking has been an important part of evaluating computers for years, but what do benchmarks really tell us and which ones can we rely on?

History shows that the way computers are evaluated continually changes. For decades, computers were sold primarily on the clock frequency of their processors. AMD was, in fact, the first processor company to reach 1 GHz, in 2000. But as frequencies rose and more architectural differences were introduced, the link between clock frequency and the performance experienced by the user became increasingly tenuous. Rising power consumption and diminishing performance gains from higher clock speeds ultimately ended clock speed's role as a performance measure. Core count became the next headline specification used to sell computers to mainstream users, with AMD demonstrating the first x86 dual-core processor in 2004 and the first native quad-core x86 server processor in 2006.

Benchmarks were developed to take the guesswork out of how much performance a given frequency or core count really delivers, and to provide objective guidance from parties outside the hardware ecosystem itself. As these software companies competed with one another to become the gold standard of benchmarking, cracks in the model began to appear: hardware companies fought for optimizations that would achieve the highest score, which diminished the credibility of benchmark scores as a measure of performance.

As processor architectures have evolved, some benchmark suites have not evolved with them, yet they remain a staple for decision makers judging the performance of computers. Serving today's PC user means tapping into previously underutilized compute resources, namely graphics processing units (GPUs) and their massively parallel compute capabilities. Modern processors such as the Accelerated Processing Unit (APU) combine Central Processing Unit (CPU) and GPU processing engines with specialized video and audio hardware, all working together to shape the user's experience and process modern workloads efficiently, delivering outstanding performance with minimal power consumption.

Users today expect a rich visual experience and engage with computers like never before: consuming, creating, integrating and sharing high-quality audio and video while interacting with their computers through touch, voice and gesture. There seems to be less interest in what is happening behind the screen and more of an expectation that the system will just work, providing a great experience at home or at work. Systems can now include 64-bit multi-core APUs such as AMD's A-Series APUs. These advanced APUs use the latest CPU and GPU technologies in designs optimized for Heterogeneous System Architecture (HSA), which allocate each piece of software work to the processing engine best suited for the task. HSA was developed to work in conjunction with newer programming models and languages such as OpenCL and C++ AMP to make the most of the available compute capabilities. Traditional microprocessor advancement has reached the point where higher clock speeds require more power and typically yield diminishing returns in performance; HSA design offers a better approach to enabling the experiences people expect today and tomorrow.

Given this seismic change in usage and user expectations, you would think that benchmarks would have changed to reflect the technology and users' needs. The sad reality is that many haven't. By measuring just one task or one type of processing, such as single-core CPU performance, these benchmarks provide a limited view of system performance that does not translate easily into the experience of using the system, which is what the user actually cares about.

Is it valid to base buying decisions on benchmarks that measure only one aspect of the processor, or that are heavily weighted toward a single, rarely used application? When buying a car, is horsepower the only specification you consider on the window sticker at the dealership? Ultimately, you are the best judge of what is good for you; in an ideal world, a hands-on evaluation would tell you whether a computer satisfies your needs or not, simple as that.

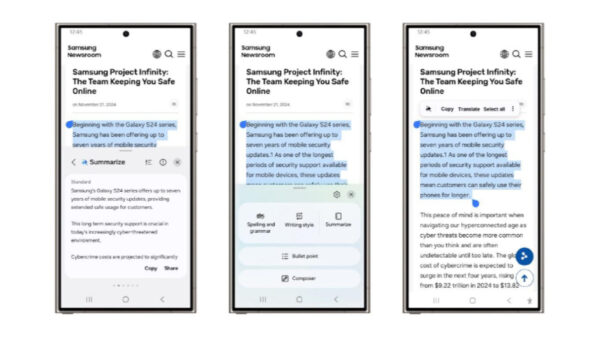

In today's online world, hands-on evaluation of a PC is not always realistic, so benchmarks still have an important role to play. There are currently three benchmarks that we believe provide a well-rounded picture of modern compute architectures, based on the typical workloads of today's consumer and commercial users. Two of them are produced by Futuremark, an organization that is open to the whole computing industry. Its recent PCMark 8 v2 benchmark suite was developed as the complete PC benchmark for home and business in collaboration with many of the industry's biggest names, including Dell, HP, Lenovo, Microsoft and many semiconductor manufacturers. For a fuller view of system performance, PCMark can be combined into a suite of modern benchmarks by adding Futuremark 3DMark for graphics and GPU compute performance and Rightware's Basemark CL for total system compute.

Creating high-quality benchmarks that truly represent today's workloads and accurately reflect users' needs should be an effort that includes the whole industry. This view is shared by industry experts such as Martin Veitch of IDG Connect:

“We need experts who can measure the right things and be open and even-handed. Otherwise, we stand, as individuals and corporations, to waste vast sums: this applies to the private sector but also government and the public sector where tendering documents can often skew choices and lock out some vendors. That’s a situation that is seeing more national and regional groups incorporate Futuremark in tendering documents. Even if they can be controversial and they never stand still for long, one thing is clear: benchmarks are important. And in any high-stakes game, you need somebody trustworthy to keep score.” Martin Veitch, IDG Connect

When the industry does not work together, the result can be benchmarks that are not representative of real-world tasks and that can be skewed to favor one hardware vendor over another. This has happened before, and while one hardware vendor may benefit, the real loser is the consumer, who is presented with skewed performance figures and may pay for a performance benefit that is perceived rather than real.

Ultimately, it is the consumer who wins when the industry works together. The European Commission's recent decision to endorse PCMark and make it a vital part of government procurement procedures is a win not just for Futuremark but also for everyone who wants to measure system performance accurately. Users can be assured that PCMark has a reputation as an accurate, representative and unbiased benchmark that stands up to the highest scrutiny.

Benchmarks may be a valuable tool for your buying decision process and an important element in your final decision, but at the end of the day, the best and toughest benchmark is you.